Bob Ross Lives! - a workshop on the creative potential of GANs by Lenka Hamosova and Pavol Rusnak

This workshop took place in 2019, when deepfakes were already old news and an abundance of new tools for producing AI-generated media was just reaching the Internet. The bar for creating synthetic media had dropped significantly, and in some cases no coding skills were required anymore. Yet this was also the best time to approach and test the current tools with a group of curious hackers and designers, before the tools got defined by large players in the media industry. The ability to break the rules, an experimental attitude toward trying out what works and what doesn’t, and, last but not least, conceptualizing the ethical aftermath of future use cases of synthetic media: these were the ingredients we hoped to merge during our workshop. Embracing the creative nature of such an activity, and especially welcoming any errors and mistakes, we called upon the legacy of Bob Ross: one of the best teachers, a promoter of tutorial-guided creativity, a true ASMR YouTuber before YouTube, and the generator of countless calming nature scenes.

Strange Attractions by Lenka Hamosova

In the context of the latest developments in deep learning, and specifically in Generative Adversarial Networks (GANs),[1] we read a lot about the downsides of these tools becoming widely accessible. The media focuses mainly on the threat of deepfakes and their consequences for politics or privacy, which generates unreasonable fear of and prejudice towards any new synthetic media. The prevailing opinion that advancements in AI-powered generative visual tools will bring only harm and confusion to visual communication will not help avoid these scenarios; in fact, it does the opposite. What our society needs instead is to see how it might also benefit from appropriating these tools, and to engage as soon as possible in exploring the new creative possibilities. There are many as-yet-unimagined positive applications of deep learning models that can generate photorealistic visuals; however, they are hard to see when we focus only on malicious uses.

To create a balanced discussion about the consequences of synthetic media becoming a natural part of visual communication, we divided the participants into two groups according to whether they preferred to explore good or malicious use cases of synthetic media. As Bob Ross used to say: “You need the dark in order to show the light.” So we, too, placed the dark right next to the light in order to highlight the positive and creative potential of GANs.

Some quite peculiar scenarios emerged during the brainstorming session. The group of “bad guys” focused on malicious uses of synthetic images, such as fake eBay advertisements with generated text and visuals, “Missing cat” posters with StyleGAN-ed cat photos, and even faked terrorist executions of face-swapped celebrities used to extort a ransom. The “good guys” saw potential, for example, in letting AI re-interpret Google Maps satellite imagery to show what our cities would look like if we don’t address climate change right now. They also thought of image analysis that would help diagnose a skin rash from a photo, and of the speculative idea of bringing Jesus back to life. Remarkably, many ideas balanced on the edge between good and evil: we couldn’t clearly label them as either genuinely good or absolutely malicious. Perhaps the novelty of synthetic media generated by neural networks is what fuels our inability to apply current moral criteria to them.

Since HDSA2019, we have facilitated multiple similar brainstorming sessions on future scenarios of synthetic media, and the outcomes continue to be very ambivalent. The ethics of using AI-generated media in daily life, of course, still need to be defined, but engaging in speculative discussions now already hints at how problematic this will be. It’s fascinating to see a tendency to think about the dark scenarios first. This might be caused either by fear or by a romanticizing of dystopian futures. However, when we asked participants to map their future scenarios on a matrix whose axes ran from good to bad and from small-scale to large-scale extrapolation, the dark scenarios turned out to sit mostly in the large-scale context. Nobody seems interested in thinking about malicious uses of synthetic media on a small or personal level, at least not during workshop brainstorming. Also, as in the HDSA2019 experiment, many scenarios stay close to the middle of the good/evil axis, as if every good thought might bring harmful consequences and vice versa.

After the brainstorming session, we introduced the tools available for producing synthetic visuals, such as Runway ML and Google Colab. By a nice coincidence, just a few days before the workshop Runway ML had introduced a feature we had all been waiting for: chaining models. Chaining lets the output of one model feed directly into another model as its input, so several steps execute at once. While using Google Colab requires a certain level of proficiency in Python, Runway ML lets you experiment with machine learning models instantly, without any coding. This not only makes machine learning more accessible to a broader audience but also greatly speeds up the prototyping of spontaneous, workshop-triggered ideas, with the immediate satisfaction of almost instant visual output.
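Conceptually, chaining is function composition: each stage’s output becomes the next stage’s input. The minimal Python sketch below illustrates only that idea. It does not use Runway ML’s actual interface, and the two stages (`text_to_image`, `image_to_caption`) are hypothetical placeholders that return toy values instead of running real models.

```python
from functools import reduce
from typing import Any, Callable

# A "model" here is any callable mapping one input to one output.
# These are hypothetical stand-ins, not Runway ML's API.
Model = Callable[[Any], Any]


def chain(*models: Model) -> Model:
    """Return one callable that feeds each model's output into the next model."""
    def run(x: Any) -> Any:
        # Start from x and apply the models left to right.
        return reduce(lambda acc, model: model(acc), models, x)
    return run


# Hypothetical toy stages standing in for text -> image -> caption.
def text_to_image(prompt: str) -> dict:
    return {"image_of": prompt}


def image_to_caption(image: dict) -> str:
    return f"a picture of {image['image_of']}"


pipeline = chain(text_to_image, image_to_caption)
print(pipeline("a happy little tree"))  # a picture of a happy little tree
```

Adding a stage means passing one more callable to `chain`; the only requirement is that each stage accepts whatever the previous one returns.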

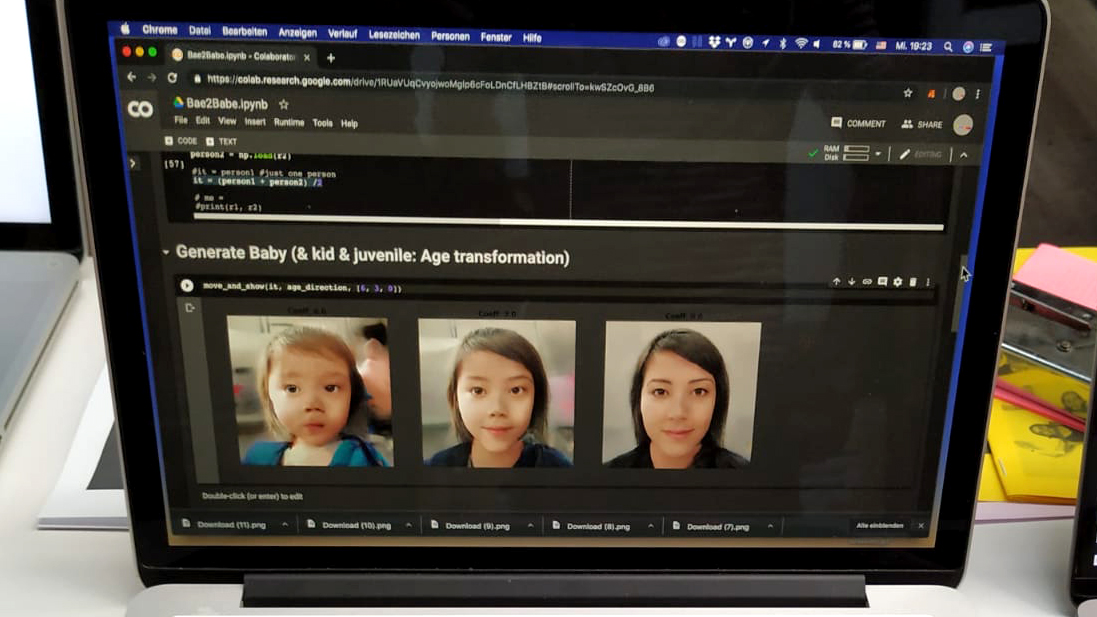

The workshop’s goal was to produce real prototypes of the previously brainstormed future scenarios of applied synthetic media over the course of three hours. That’s quite a short time, and definitely not enough for more complex processes such as training a model on a custom dataset. Playing with Runway ML or Google Colab enabled a faster turnaround of visuals and made conceptualizing speculative scenarios much easier.

The following examples show the range of imagined plots and tools used for experimentation. Google Colab gave us the opportunity to play with generating faces (StyleGAN-Encoder + StyleGAN, https://github.com/NVlabs/stylegan), which naturally led to the idea for the “Bae2Babe App”: an app that would envision what the child of two (or even more) people might look like. Jonas Bohatsch showed us random combinations of workshop participants’ facial values, resulting in a toddler’s face. Imagine this being a feature of dating apps such as Tinder!
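One plausible reading of “random combinations of facial values” is a weighted blend of encoded faces in StyleGAN’s latent space. The NumPy sketch below assumes each participant’s face has already been encoded (for example with a StyleGAN encoder) into a latent array. The `(18, 512)` shape follows a common layout for a 1024px StyleGAN and is an assumption, as is the choice of Dirichlet-distributed weights. It is not Jonas Bohatsch’s actual implementation; it stops before the generator step and does not attempt the toddler look.

```python
import numpy as np


def mix_latents(latents: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Blend several encoded faces into one latent with random weights.

    latents has shape (n_people, *latent_shape), e.g. (n, 18, 512) for the
    per-layer latents of a 1024px StyleGAN (assumed layout).
    """
    n_people = latents.shape[0]
    # Dirichlet weights are non-negative and sum to 1, so the result is a
    # convex combination lying "between" the parents in latent space.
    weights = rng.dirichlet(np.ones(n_people))
    # Contract the weight vector against the first (people) axis.
    return np.tensordot(weights, latents, axes=1)


rng = np.random.default_rng(0)
# Random placeholder latents standing in for three encoded participants.
parents = rng.standard_normal((3, 18, 512))
child = mix_latents(parents, rng)
print(child.shape)  # (18, 512)
```

Because the weights always form a convex combination, any number of “parents” can be mixed the same way, and each call draws a different random combination. Rendering the blended latent would require passing it to a StyleGAN generator, which is not shown here.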

Bae2Babe App by Jonas Bohatsch

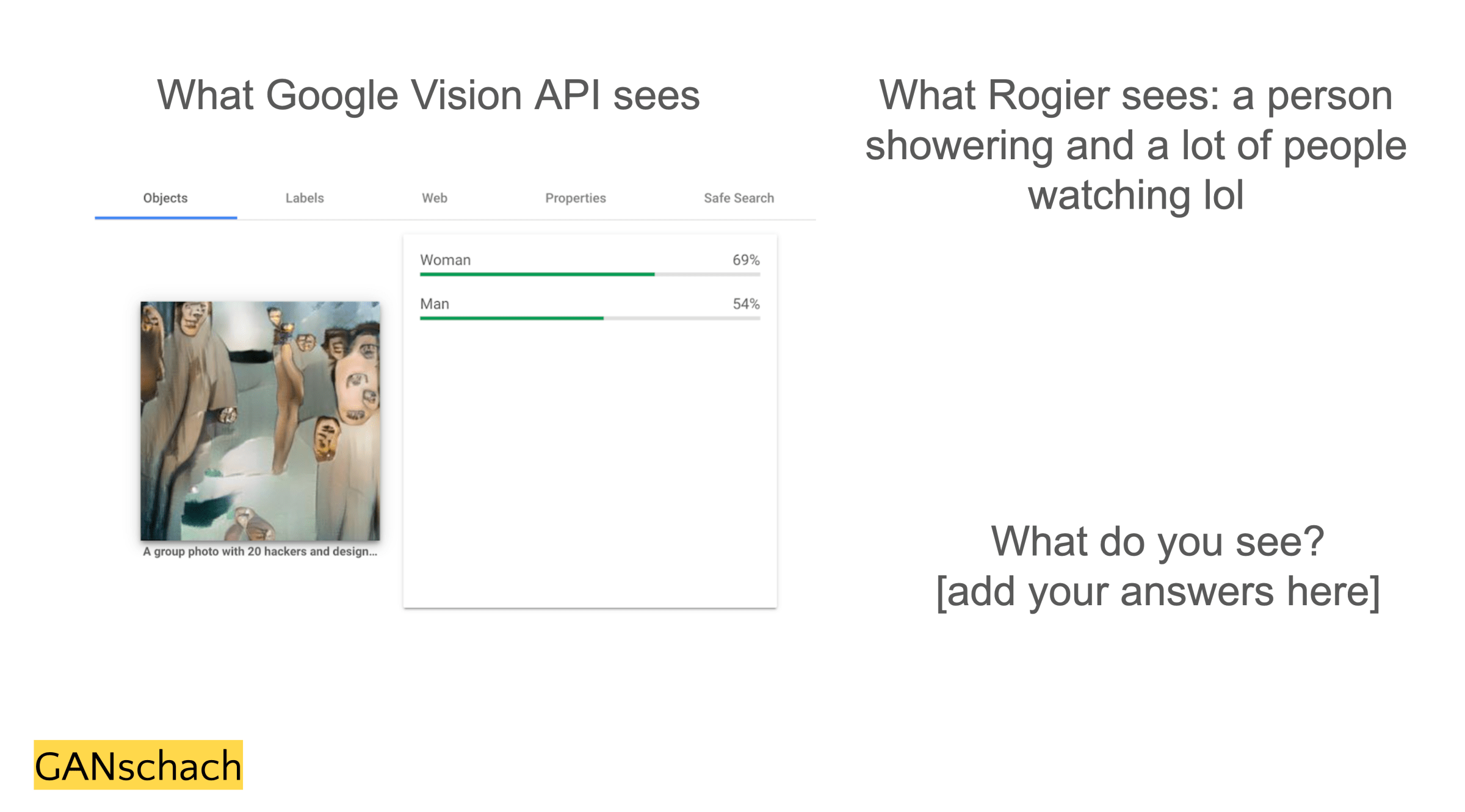

Just as Rorschach inkblots are meant to reveal unconscious aspects of our personality and emotional functioning, computer vision combined with generating images from words could do the same. In light of this, Nadia Piet created “GANschach,” a set of AttnGAN-generated images used to measure the unconscious parts of human and machine perception and association. The process combines AttnGAN (in Runway) with the Google Cloud Vision API and compares the results with what people saw in the images. Nadia ends her project presentation with a question: “Did they just create their own encrypted language that I/we cannot understand?”
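The project description does not say how machine labels and human answers were compared. As one hypothetical measure, the Python sketch below scores the overlap between label sets with Jaccard similarity (shared labels divided by all distinct labels). The label lists are invented placeholders, and the sketch does not call AttnGAN or the Google Cloud Vision API.

```python
from typing import Iterable


def normalize(labels: Iterable[str]) -> set[str]:
    """Lower-case and trim labels so 'Bird ' and 'bird' count as the same word."""
    return {label.strip().lower() for label in labels if label.strip()}


def jaccard(a: Iterable[str], b: Iterable[str]) -> float:
    """Overlap between two label sets: 0.0 = nothing shared, 1.0 = identical."""
    set_a, set_b = normalize(a), normalize(b)
    if not set_a and not set_b:
        return 1.0  # two empty answers trivially agree
    return len(set_a & set_b) / len(set_a | set_b)


# Hypothetical example data for one generated image (not from the project).
prompt_words = ["bird", "sky"]              # words fed to the text-to-image model
machine_labels = ["Bird", "Sky", "Art"]     # labels a vision API might return
human_answers = ["bird", "cloud", "smoke"]  # what a participant said they saw

print(f"prompt vs machine: {jaccard(prompt_words, machine_labels):.2f}")  # 0.67
print(f"machine vs human:  {jaccard(machine_labels, human_answers):.2f}")  # 0.20
print(f"prompt vs human:   {jaccard(prompt_words, human_answers):.2f}")  # 0.25
```

Comparing all three pairs separates how faithfully the image carried the prompt (prompt vs machine) from how differently humans and the machine read the same picture (machine vs human).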

GANschach by Nadia Piet

Models that could describe what is in an image, or generate an image from words, drew notable attention from participants. Together with generated selfies and gender swaps, they fall into the must-try category and generated initial excitement with their first few outputs.

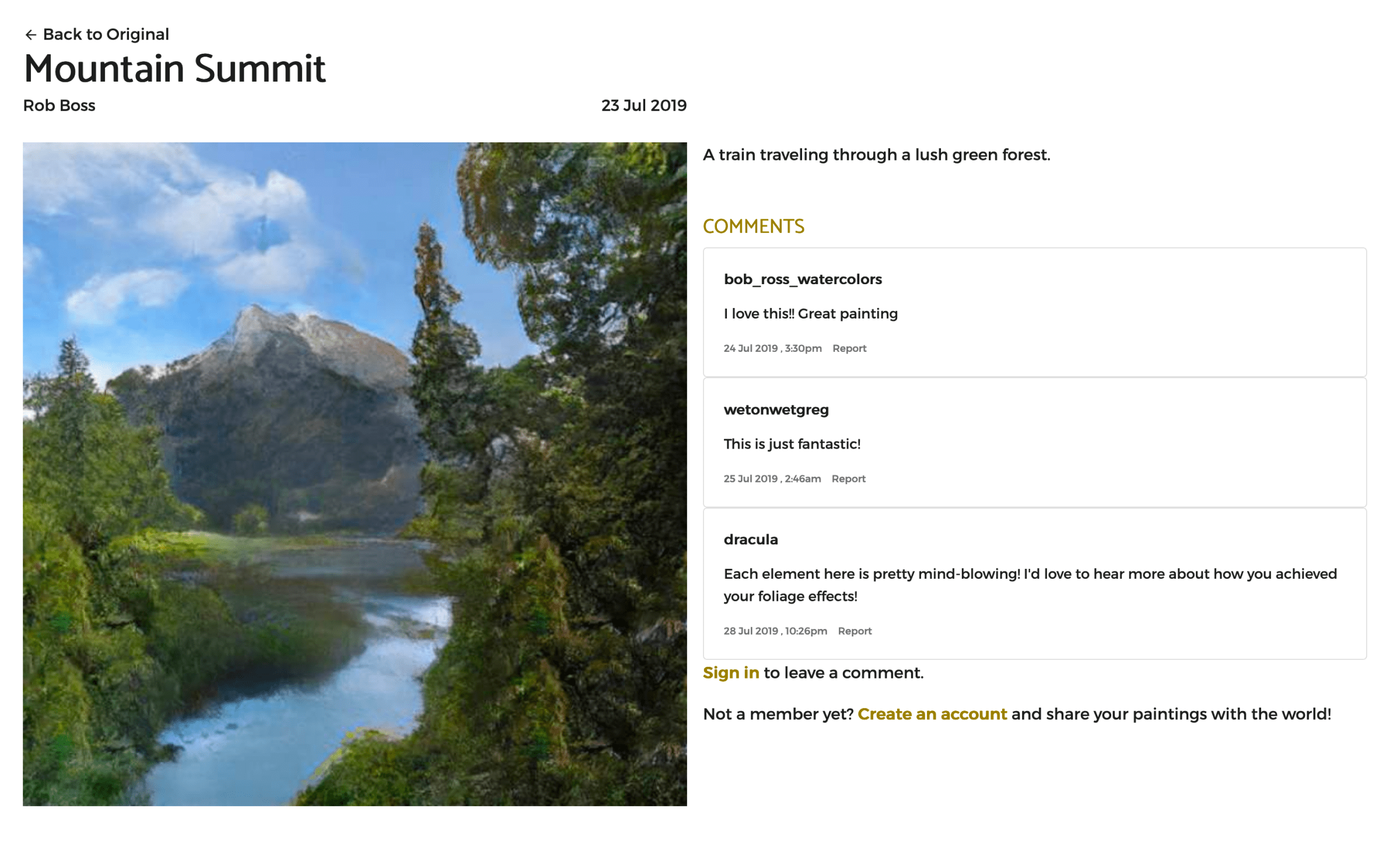

Bob Ross was a huge inspiration for this workshop, and he also received a lovely tribute in the form of a conceptual project by Selby Gildemacher. Selby let neural networks re-interpret several Bob Ross paintings, combining the magic of the SPADE and im2txt models. By creating a segmentation map from each original picture and generating a synthetic painting from that map, the neural networks were in effect painting according to Bob Ross’s tutorial (and thereby unknowingly participating in The Joy of Painting). The generated paintings were uploaded to the Two Inch Brush database, a website where Bob Ross fans upload their own versions of his paintings, under the user account Rob Boss (2020 update: the account got banned and the paintings were deleted). It’s a synthetic masterpiece, consistent in every step, including the generated descriptions of the paintings and even the description of Rob Boss himself! Soon enough, the Internet noticed the new member of the community, and the first comments praised the nice foliage effect and brush strokes, unknowingly appreciating a neural network’s work. This raises interesting questions: where does an AI-generated fake painting stand among other fake paintings made by humans? Is it faker than the other tribute paintings? Does acknowledging the synthetic origin of these paintings even matter?

Rob Boss by Selby

Synthetic media will soon be a natural part of our communication, co-existing with media made the “old-fashioned” way, and many of us will probably not even be aware of it. Exposing ourselves to this idea in advance, and experimenting with conflicting scenarios like these, contributes necessary input to the AI ethics debate. Some situations seem less scary once we expose ourselves to them. Others might surprise us with unexpected biases and affect us enough that we want to change them and have our say, for example, in how datasets are produced. Some questions may still be hard to answer at this point. But knowing how AI-driven generative tools work is the first step in moving from the passive role of a powerless information consumer to that of a self-reliant, knowledgeable contributor to the synthetic future.

--

This workshop led to subsequent workshops and lectures on this topic and evolved into a participatory project called Collective Vision of Synthetic Reality. The project aims to collect and analyze ideas, scenarios, absurd dreams, hopes, and fears concerning the emergence of AI-generated synthetic media by crowdsourcing a broad scope of visions from a diverse audience. The collected database represents the average values of participants, their inclination towards utopian or dystopian thinking about the near future, specific future use cases for synthetic media, and the “recipes” for generating them.

More information at https://syntheticreality.hamosova.com

--

Our recent StyleGAN experiments:

Strange Attractions

"Strange Attractions is when your outlook on life changes, that it may seem to your cognitively dimming self, that there are some awesome things happening around the world. That seems to be just about the safest thought that you can have. If the safest possible thought is also the right or most interesting one to be having, that's how you can get excited about flying over the Great Barrier Reef, finding Earth's oldest fossils on an old underwater mountain in Montana or looking at the newly discovered habitability of a deep, hot lake on Mars.

If you find that you can't find excitement for the same reasons you used to, then we must stop being ambivalent about that. You can't help being attracted to danger if you do not like the environment."[2]

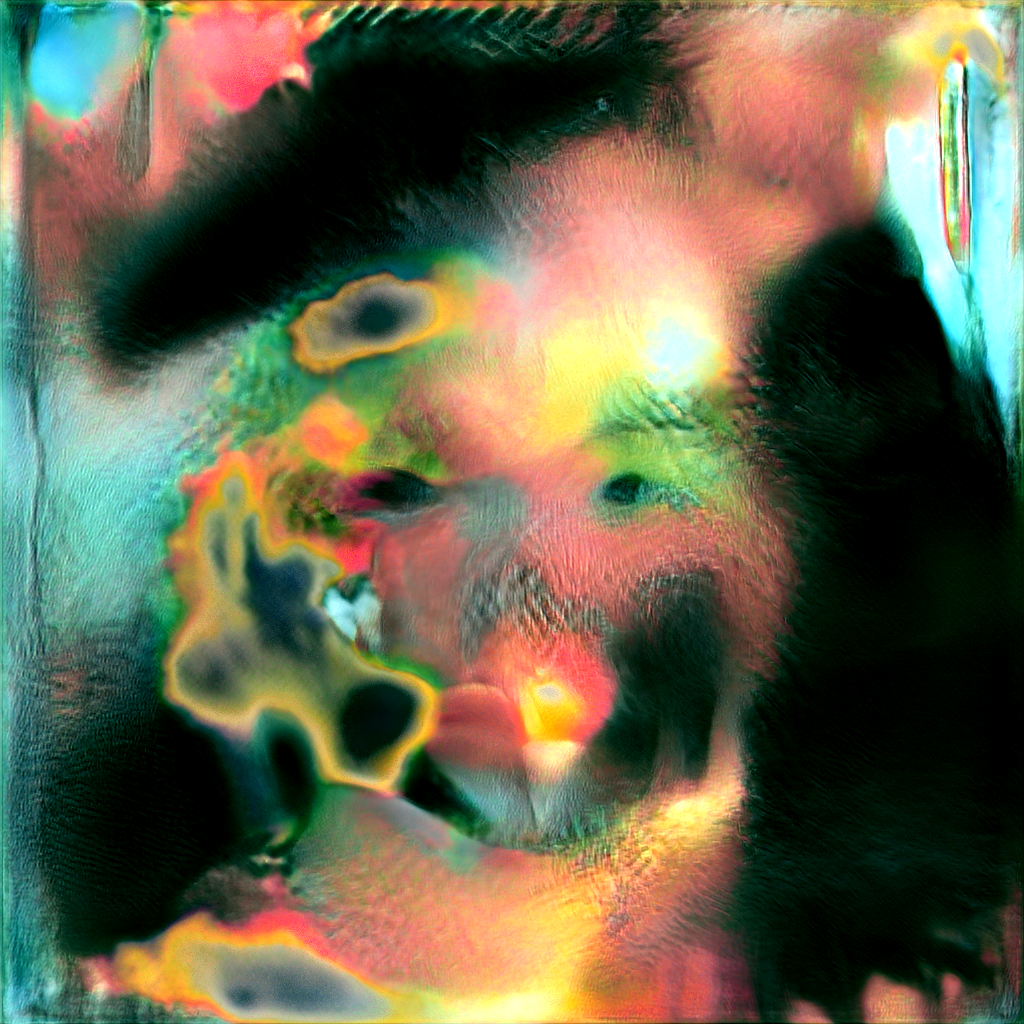

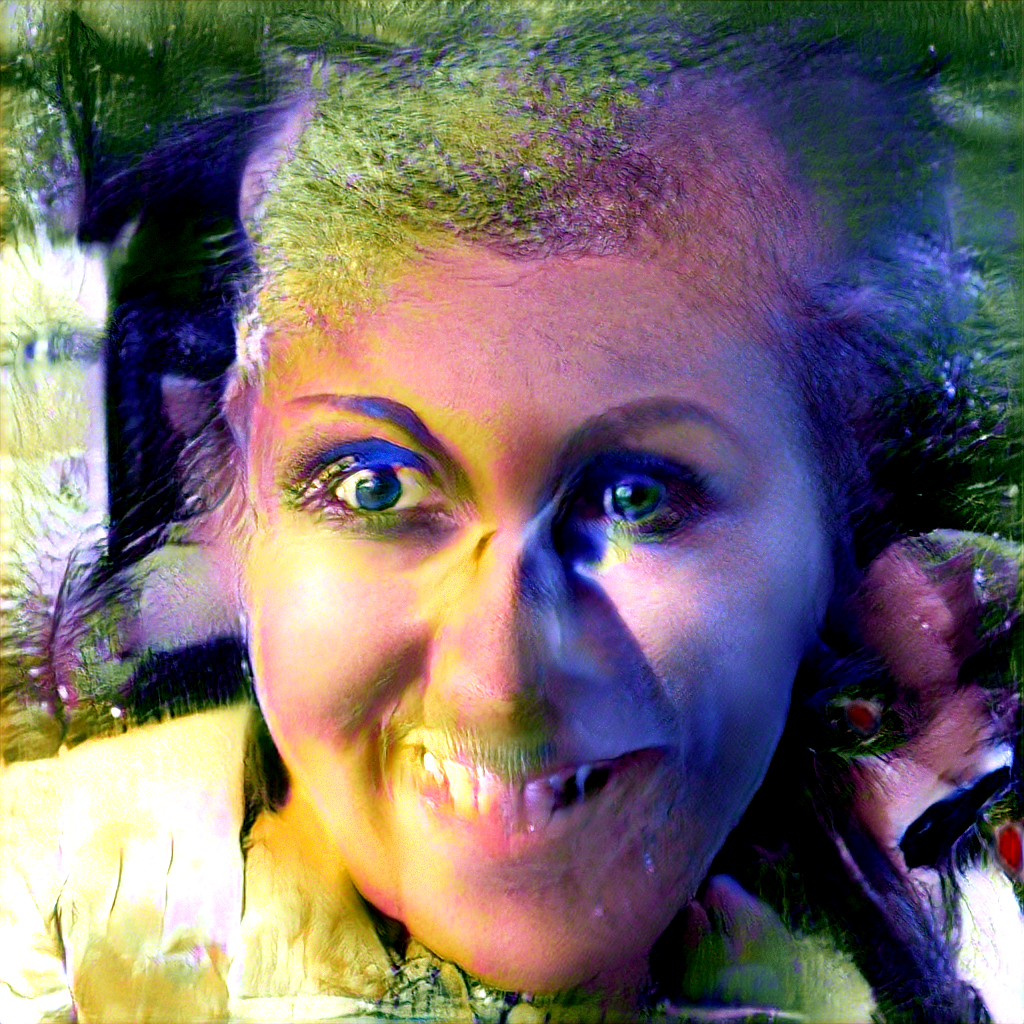

We generated thousands of uncanny-looking but beautiful human faces with StyleGAN. These faces result from randomized vector inputs, definitely not the kind of use StyleGAN was intended for. However, taking an unexpected turn and choosing a different pathway is what usually brings new ideas. We could imagine that this is what it looks like inside StyleGAN’s dreams: the interrupted process of imagining a face. Ranging from more abstract to more concrete, these faces offer a surprisingly colorful look inside face synthesis and come with a mesmerizing aesthetic. As a tribute to the website thispersondoesnotexist.com, we launched strangeattractions.xyz, where you can generate a new face each time you click or refresh.

- Tutorial: https://rusnak.io/strange-attractions/

- More: https://hamosova.com/Collective-Vision-of-Synthetic-Reality

Strange Attractions IG face filter

- Instagram face filter that puts Strange Attractions on your face. Creepy AF.

- Search IG filters by name: Strange Attractions

- or find it on my profile: https://www.instagram.com/lenkahamosova/

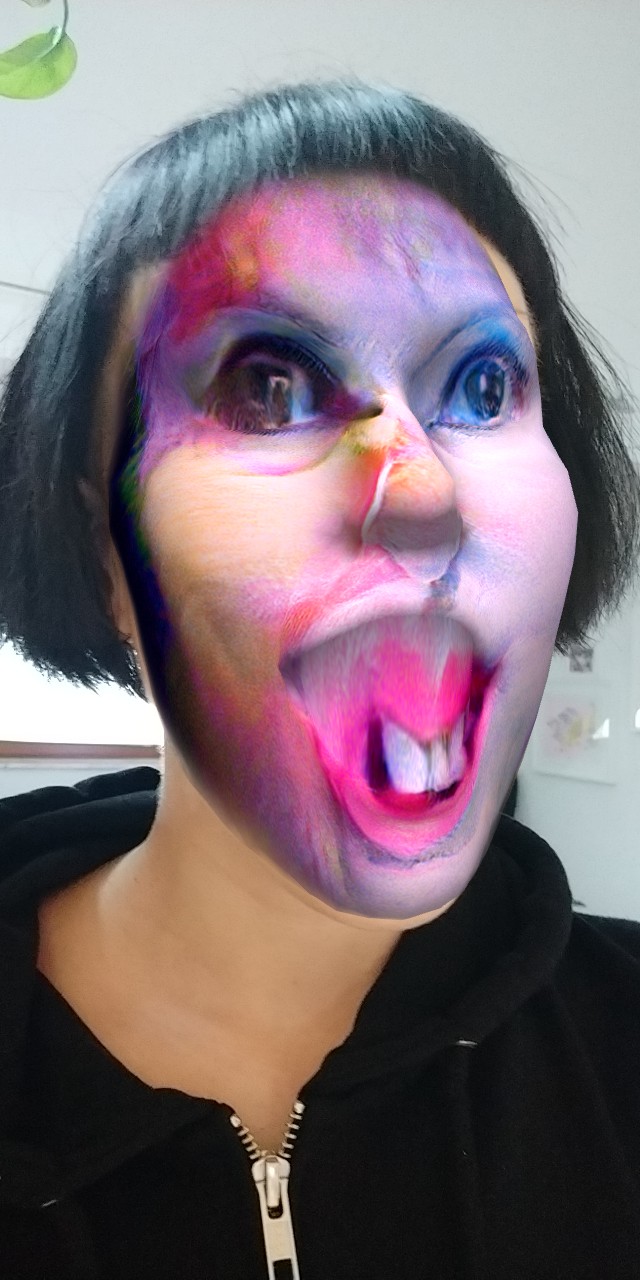

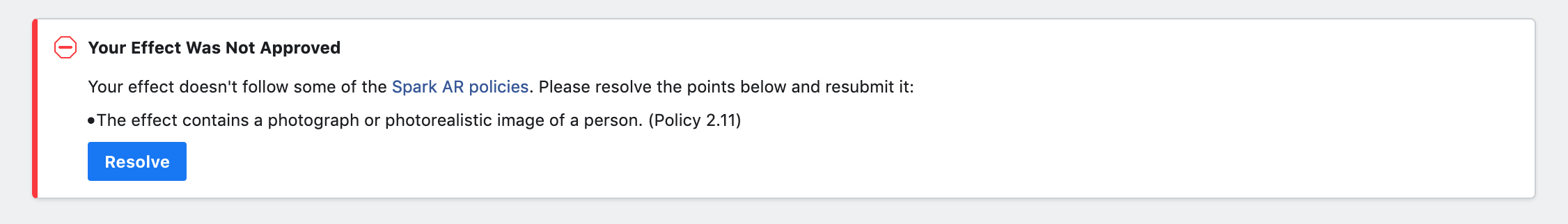

StyleGAN Anonymizer IG face filter

This filter was designed as an anonymization tool. It replaces one’s face with a synthetic (AI-generated) face to maintain privacy. It could have been handy during events with a strict photo policy, but it did not make it through the approval process. Apparently, it contains “a photograph or photorealistic image of a person” (violation of Spark AR/Instagram Policy 2.11). Except that it doesn’t. The faces in this effect are all genuinely generated by StyleGAN; they don’t belong to any human being, but yes, we agree—it is definitely an unsettling experience to try it on your face.

--

notes

1. Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A. and Bengio, Y. (2014). Generative Adversarial Nets. [online] Available at: https://papers.nips.cc/paper/5423-generative-adversarial-nets.pdf.

2. Text generated with GPT-2, a model that generates coherent paragraphs of text one token (roughly a word or word fragment) at a time, via https://talktotransformer.com/

https://hamosova.com/ https://rusnak.io/

This text was published in Coded Bodies Publication in 2020